Cellular Au-Tonnetz: A Unified Audio-Visual MIDI Generator

Introduction

Traditional approaches to integrating visuals with music often involve sound-reactive systems where visuals respond to audio input. While effective, these systems typically treat sound and visuals as separate entities, which limits how unified the audio-visual result can be. This post describes a tool that generates sound and light simultaneously from the same underlying state, offering a cohesive platform for music creation that is both interactive and immersive.

Interactive tools have a significant impact on music education and creative expression, enabling users at every level of ability to explore musical concepts in an accessible manner (see Frid, 2019). By integrating sound and light generation, the tool fosters a deeper understanding of musical harmony and structure, aligning with theories on the benefits of multi-sensory learning environments.

The inspiration for this project stems from artists like Max Cooper, Four Tet, and collectives like Squid Soup, who integrate visuals directly into the music-making process, creating immersive experiences where sound and light are intrinsically linked. Their work highlights the potential of synchronized audio-visual systems to enhance live performances and engage audiences on multiple sensory levels.

Here, we introduce a unified audio-visual music creation tool that leverages the Tonnetz (a geometric representation of tonal space) and cellular automata to generate evolving musical patterns and synchronized light displays. The system is built using embedded electronics and IoT technologies, providing real-time control and scalability for multi-unit installations.

Background and Inspiration

Tonnetz and Its Applications

The Tonnetz, or "tone network," is a conceptual lattice diagram representing the relationships between pitches in just intonation, initially developed by Leonhard Euler in the 18th century (see Cohn, 1997). It visually maps out harmonic relationships, such as fifths, thirds, and their inversions, providing a geometric representation of tonal space.

In music theory, the Tonnetz has been instrumental in analyzing harmonic progressions and voice leading, particularly in neo-Riemannian theory. Its applications extend to computational musicology and the development of musical interfaces, where it serves as a foundation for creating intuitive layouts that reflect harmonic relationships.

This work uses the Tonnetz to map musical notes onto a two-dimensional grid, so that cellular automata can generate harmonically coherent musical sequences. Because neighboring cells on the Tonnetz are harmonically close, simple rule-based algorithms are enough to generate evolving melodies automatically.

Cellular Automata in Music

Cellular automata (CA) are discrete, abstract computational systems that have been employed in various fields for modeling complex behaviors. In music, CAs have been explored for algorithmic composition, offering a means to generate musical structures based on simple, local interactions.

Synesthetic Experiences in Music

Synesthesia, a phenomenon where stimulation of one sensory pathway leads to involuntary experiences in another sensory modality, has long intrigued artists and scientists. In music, synesthetic concepts have been applied to create multi-sensory experiences, linking sound with visual elements such as color and light. Composers like Alexander Scriabin explored the association between musical notes and colors, aiming to create a unified sensory experience. Others have combined Tonnetz approaches and color mapping for video game music generation.

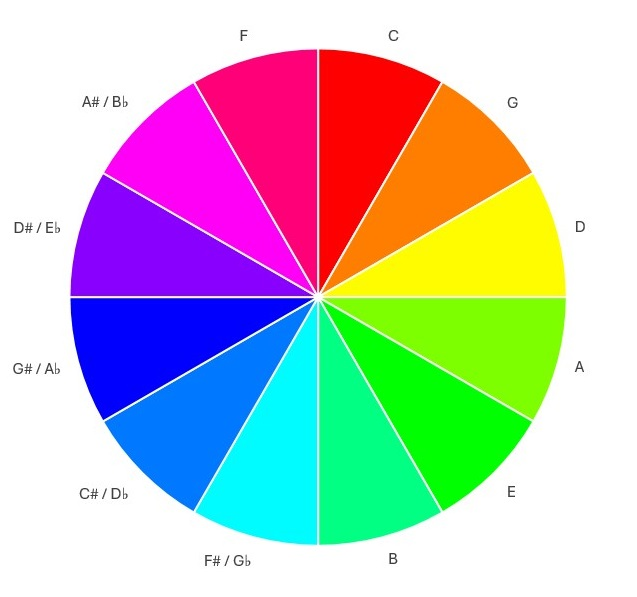

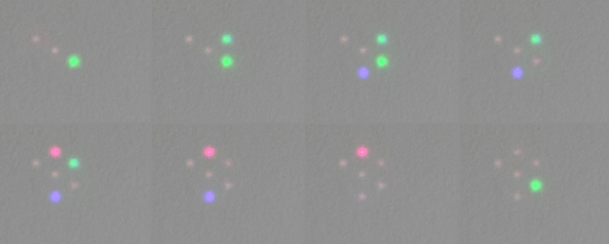

This project draws inspiration from these ideas, integrating light directly into the music-making process by associating specific hues with musical notes on the Tonnetz grid. This integration enhances the user's engagement and provides a visual representation of harmonic relationships. Figure 1 demonstrates one of the many possible mapping choices that evenly space the RGB color palette for straightforward hardware realization.

Inspiration and the Role of Modern Music Production

The idea to create a system that could act as a virtual orchestra, generating evolving compositions based on cellular automata, was inspired by a video on how Radiohead and Hans Zimmer collaborated to create the soundtrack for Blue Planet II. Their innovative use of orchestration and technology to craft immersive soundscapes inspired the development of an electronic orchestra, albeit on a smaller scale.

The goal was to create a system where each virtual orchestra member, represented by a point in a 2D array, could contribute to a dynamic, ever-changing composition. By limiting each member to playing only when no neighboring members were active, a sense of movement and progression in the music could be maintained. This approach allowed for a focus on sound design, knowing that the underlying structure would remain musically coherent.

Discovering the Tonnetz

During the early stages of development, research into geometric music theory led to the Tonnetz. The Tonnetz, a means of arranging notes by their proximity to harmonic neighbors, provided an elegant alternative to the traditional piano keyboard layout, which can feel esoteric and unintuitive to non-musicians. By combining the Tonnetz with the array of virtual orchestra members, it became possible to move beyond simple, single-tone outputs. The proximity of harmonic neighbors in the Tonnetz allowed for the automatic generation of unique, evolving melodies through cellular automata rules. This discovery was pivotal in advancing the project from a single-tone soundscape generator to a tool capable of producing complex, layered musical compositions.

Integrating Synesthetic Elements

The association of colors with musical notes enhances the multi-sensory experience of the system. Drawing inspiration from Scriabin's color theories, each note on the Tonnetz grid was linked to a specific hue, creating a visual manifestation of harmonic relationships. This synesthetic integration not only enriches the user experience but also serves as an educational tool for understanding music theory.

System Design and Implementation

This section presents a comprehensive description of the technology developed for the latest iteration, TZ5, focusing on its architecture and functionality. The TZ5 system is designed to provide a desktop audio-visual music creation experience by integrating web technologies, Wi-Fi connected embedded systems, and algorithmic composition techniques.

System Architecture Overview

The TZ5 system comprises four main components:

- Web Application: Captures user inputs and provides configuration parameters, saved in a MySQL database.

- Control Board: Handles the generation of music and visual outputs based on parameters from the web application.

- Audio Synthesis: Generates the actual audio output from MIDI signals.

- Hexagonal LED Array: Generates the light output from the control board's addressable LED signals.

Web Application

The web application serves as the user interface and configuration manager for the TZ5 system. Developed using HTML, JavaScript, PHP, and SQL, it lets users adjust parameters through sliders, buttons, and other controls; the values are then stored in the MySQL database. Its main functions are an interactive user interface, backend processing in PHP, and serving the stored parameters to the control board as JSON.
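As a rough illustration of the JSON hand-off, the sketch below parses a parameter payload into a typed structure. The field names and default values are hypothetical, loosely modelled on the controls listed in the Feature Summary; the actual schema used by TZ5 is not given in this post.

```python
import json
from dataclasses import dataclass


@dataclass
class UnitParams:
    """Hypothetical per-unit parameters; names and defaults are illustrative only."""
    max_notes: int = 8
    min_notes: int = 1
    max_neighbors: int = 2
    extended_neighbors: bool = False
    loop_on: bool = False
    loop_steps: int = 16
    hold_in_reset: bool = False


def parse_params(payload: str) -> UnitParams:
    """Parse a JSON object, ignoring unknown keys and keeping defaults for missing ones."""
    raw = json.loads(payload)
    known = {k: v for k, v in raw.items() if k in UnitParams.__dataclass_fields__}
    return UnitParams(**known)


# Example payload as the control board might receive it (assumed shape).
example = '{"max_neighbors": 6, "loop_on": true, "loop_steps": 8, "unused": 1}'
params = parse_params(example)
print(params)
```

Ignoring unknown keys and falling back to defaults is one way to keep the firmware tolerant of web-side changes; a stricter design could reject unexpected fields instead.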

Control Board

The control board is the core of the TZ5 system, built around the ESP32-S3 microcontroller. It is responsible for generating the musical and visual outputs based on the parameters received from the web application.

Orchestra Initialization

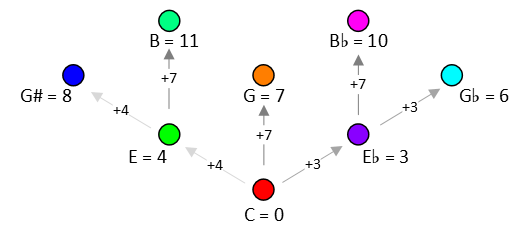

The controller initializes the Tonnetz grid by assigning MIDI numbers to each orchestra member, building up from the bottom of the grid. Figure 3 illustrates this assignment.

The assignment of MIDI numbers allows for harmonically coherent note generation, as neighboring cells in the grid are harmonically related.
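A minimal sketch of such an assignment follows, assuming a hexagonal grid addressed with axial coordinates `(q, r)` and a common Tonnetz convention: one step along `q` is a perfect fifth (+7 semitones) and one step along `r` is a major third (+4), which makes the remaining diagonal a minor third (+3). The radius of 5 (91 cells) is chosen to match the 91-LED panel, and the grid is anchored at middle C in the center rather than built up from the bottom as in Figure 3; all of these are assumptions, not the TZ5 layout itself.

```python
from __future__ import annotations


def hex_cells(radius: int) -> list[tuple[int, int]]:
    """Axial (q, r) coordinates of every cell in a hexagon of the given radius."""
    return [
        (q, r)
        for q in range(-radius, radius + 1)
        for r in range(-radius, radius + 1)
        if abs(q + r) <= radius
    ]


def assign_midi(radius: int = 5, center_note: int = 60) -> dict[tuple[int, int], int]:
    """Map each cell to a MIDI note: +7 semitones per q step, +4 per r step."""
    return {(q, r): center_note + 7 * q + 4 * r for q, r in hex_cells(radius)}


grid = assign_midi()
print(len(grid))  # 91
# Center, fifth above, major third above, minor third above:
print(grid[(0, 0)], grid[(1, 0)], grid[(0, 1)], grid[(1, -1)])  # 60 67 64 63
```

With these defaults the notes span MIDI 25 to 95, so every cell stays inside the valid 0 to 127 range.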

Network Controller & Cellular Automata Algorithm

The network controller handles communication between the control board and the web application. The cellular automata (CA) algorithm determines the states of the virtual orchestra members. Its parameters include looping (replaying a sequence of coordinates), the neighbor counting method (local or extended), and population limits, which together shape how the composition evolves.

The algorithm operates in a loop: updating orchestra members, selecting coordinates (random or looped), applying population limits, checking neighbors, applying CA rules, and finally managing MIDI and LED outputs.
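The loop above can be sketched as follows. This is an illustrative rule set, not the TZ5 firmware: it assumes a hexagonal grid in axial coordinates, takes "extended" neighbor counting to mean cells up to two steps away, lets an inactive cell switch on only when its active-neighbor count is at most `max_neighbors`, and treats the population limits as a simple floor and ceiling. Looping is omitted; it would replace the random choice with a replay of stored coordinates.

```python
import random
from typing import List, Optional, Set, Tuple

Cell = Tuple[int, int]
RADIUS = 5  # hexagon of 91 cells, assumed to match the 91-LED panel


def in_grid(cell: Cell) -> bool:
    q, r = cell
    return max(abs(q), abs(r), abs(q + r)) <= RADIUS


def neighbors(cell: Cell, extended: bool = False) -> List[Cell]:
    """In-grid cells within hex distance 1, or 2 when 'extended' (assumed meaning)."""
    q, r = cell
    reach = 2 if extended else 1
    found = []
    for dq in range(-reach, reach + 1):
        for dr in range(-reach, reach + 1):
            if (dq, dr) != (0, 0) and abs(dq + dr) <= reach:
                if in_grid((q + dq, r + dr)):
                    found.append((q + dq, r + dr))
    return found


def step(active: Set[Cell], cell: Cell, max_neighbors: int = 2,
         min_notes: int = 1, max_notes: int = 8,
         extended: bool = False) -> Optional[str]:
    """Apply the illustrative rule to one selected cell; return 'on', 'off', or None."""
    if cell in active:
        if len(active) > min_notes:  # population floor
            active.remove(cell)
            return "off"
        return None
    busy = sum(1 for n in neighbors(cell, extended) if n in active)
    if busy <= max_neighbors and len(active) < max_notes:  # population ceiling
        active.add(cell)
        return "on"
    return None


rng = random.Random(0)
cells = [(q, r) for q in range(-RADIUS, RADIUS + 1)
         for r in range(-RADIUS, RADIUS + 1) if in_grid((q, r))]
active = {(0, 0)}
for _ in range(32):
    cell = rng.choice(cells)       # random coordinate selection
    event = step(active, cell)
    if event:
        print(event, cell)         # here a real system would emit MIDI and update LEDs
```

Each returned `'on'` or `'off'` event is the point where note messages and LED updates would be triggered.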

MIDI and LED Controllers

The MIDI controller sends note on/off messages to audio synthesis software or hardware. The LED controller updates the RGB LEDs based on the active cells, using the HSV color model to map notes to specific hues.
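To make the two outputs concrete, the sketch below builds raw MIDI Note On and Note Off messages and derives an RGB color from a note through HSV. The message layout follows the standard MIDI 1.0 byte format. The color mapping, which spreads the twelve pitch classes evenly around the hue circle in chromatic order, is only one possible choice and is an assumption here; the mapping actually used on the panel is the one shown in Figure 1.

```python
import colorsys
from typing import Tuple


def note_on(note: int, velocity: int = 100, channel: int = 0) -> bytes:
    """Three-byte MIDI Note On message: status 0x90 plus channel, note, velocity."""
    return bytes([0x90 | (channel & 0x0F), note & 0x7F, velocity & 0x7F])


def note_off(note: int, channel: int = 0) -> bytes:
    """Three-byte MIDI Note Off message: status 0x80 plus channel, note, velocity 0."""
    return bytes([0x80 | (channel & 0x0F), note & 0x7F, 0])


def note_to_rgb(note: int, value: float = 1.0) -> Tuple[int, int, int]:
    """Map the pitch class to a hue in 30-degree steps (assumed mapping), full saturation."""
    hue = (note % 12) / 12.0
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, value)
    return (round(r * 255), round(g * 255), round(b * 255))


print(note_on(60).hex())   # 903c64
print(note_to_rgb(60))     # (255, 0, 0)
print(note_to_rgb(64))     # (0, 255, 0)
```

Because the hue depends only on the pitch class, the same note in different octaves shares a color; octave could instead be mapped to brightness through the `value` argument.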

Audio Synthesis Subsystem

The audio synthesis subsystem generates audio from MIDI signals, either via a Digital Audio Workstation (DAW) like Ableton Live or a hardware synthesizer.

LED Panel Subsystem

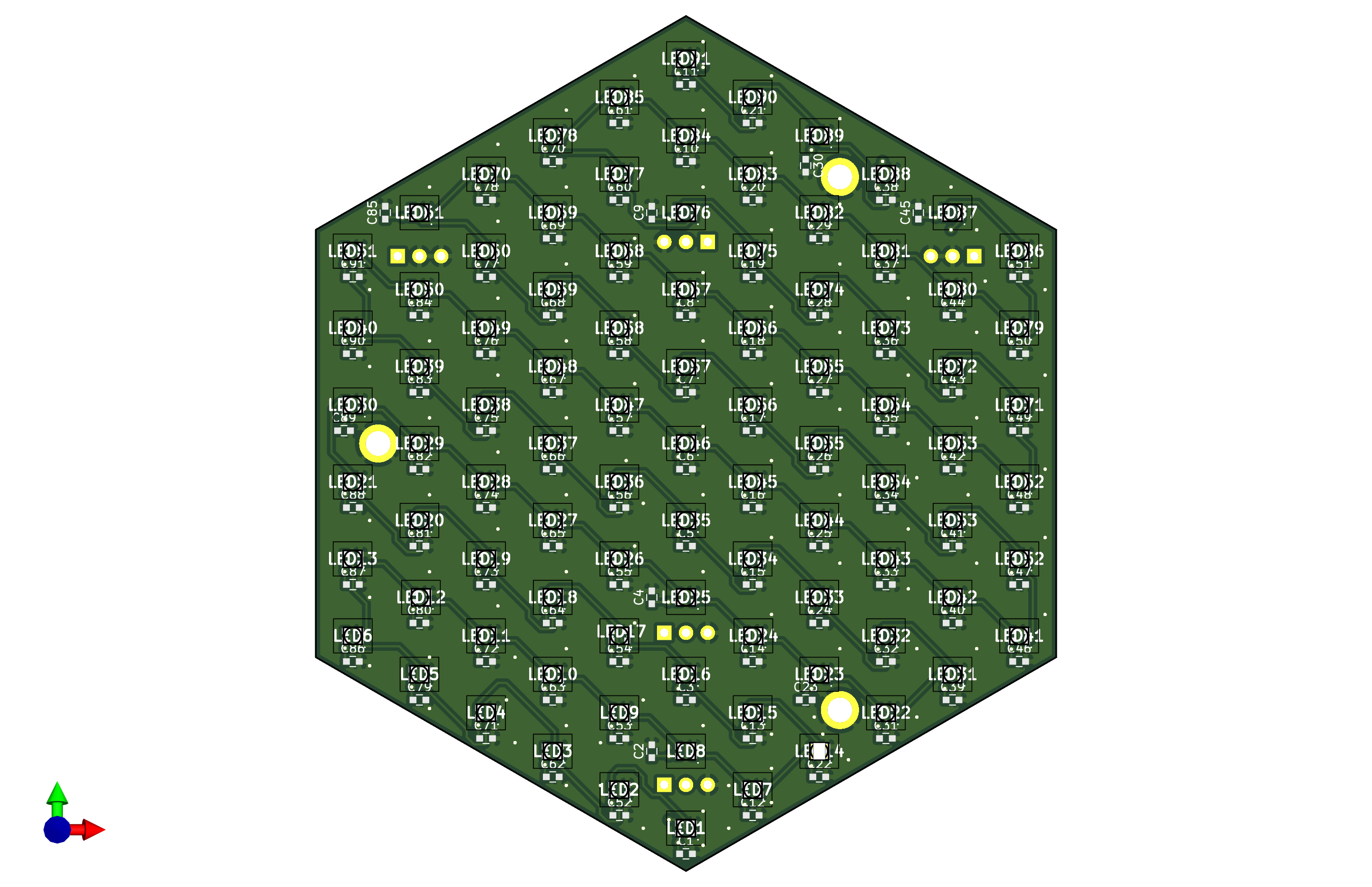

The control board can drive various LED panel configurations:

- 91-LED Custom PCB Panel: A 10 cm circuit board with 91 RGB SMD LEDs (2020 package) arranged in a hexagonal grid.

- 70 cm Plywood Panel: A larger panel utilizing waterproof LED strips mounted on plywood for expansive visual fields.

Results and Demonstration

An example of a performance can be viewed here. The system's capabilities are showcased through a separate video demonstration.

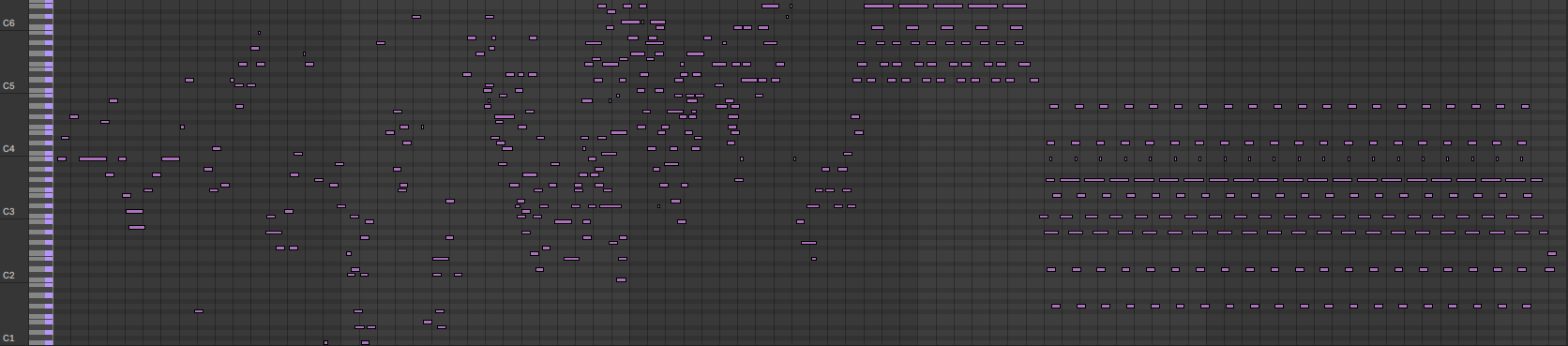

The system features real-time control, evolving compositions, looping, musical scale/chord progression constraints, and scalability across multiple units. The MIDI data for the central unit can be seen in Figure 7.
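One simple way a scale constraint could work is to let a cell sound only if its pitch class belongs to the selected scale. The sketch below shows that idea; the function name, the interval table, and the filtering approach are assumptions for illustration, since this post does not describe how TZ5 applies its scale and chord constraints.

```python
# Semitone offsets of the major scale relative to its root.
MAJOR = (0, 2, 4, 5, 7, 9, 11)


def in_scale(note: int, root: int = 0, intervals=MAJOR) -> bool:
    """True if the MIDI note's pitch class belongs to the scale built on 'root'."""
    return (note - root) % 12 in intervals


notes = [60, 61, 62, 63, 64, 67, 70]
print([n for n in notes if in_scale(n)])  # [60, 62, 64, 67]
```

A chord progression could be handled the same way by swapping the interval table and root over time.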

Initial State

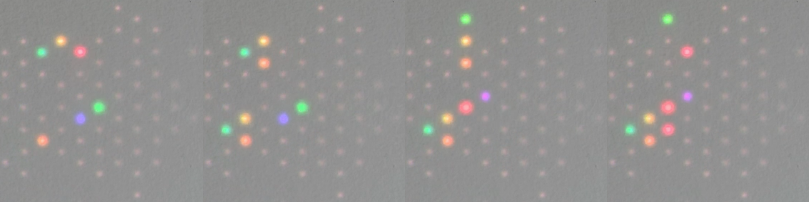

The system is provided an initial state via the web application. Figure 8 shows the first few transitions from the initial state as the device is taken out of reset.

Maximum Neighbors

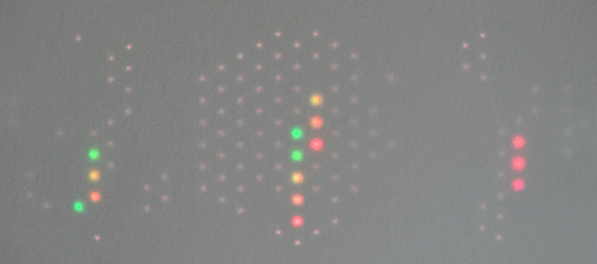

The maximum neighbors parameter determines how many orchestra members in a local neighborhood are allowed to be active together. Figure 9 shows four transitions where the maximum neighbors parameter has been increased from 2 to 6, causing a shift from strings of notes to clusters.

Multiple Units

The web application allows independent control of multiple units. Figure 10 shows additional units contributing to the ensemble, each with distinct parameters.

Feature Summary

| Feature | Description |
|---|---|
| Real-Time Control | Hold in Reset, Initial State Setup, Random Re-initialization |
| Evolving Compositions | Max/Min Notes, Neighbor Counting (Local/Extended), Max Neighbors, Variable Rate |
| Looping | Loop On/Off, Variable Loop Steps |
| Harmony | Scale Selection, Chord Progressions |
| Scalability | Multi-Unit Synchronization, Distinct Parameters per Unit |

Conclusion

This project presented the development of the TZ5 system, a unified audio-visual music creation tool that integrates Tonnetz-based harmonic mapping, cellular automata, and IoT tools. The project demonstrates how iterative enhancements and user-centric design can lead to a versatile tool for music creation and exploration.

Future work includes detailed usability evaluations, quantitative analysis using tools like Music21, a library of presets, enhanced interactivity via gesture control, and educational applications. Ongoing work also includes building a 3-meter-diameter panel to significantly expand the scale of the visual display.